Soup Bot: A Slack Bot That Scrapes Specials Menus From Instagram

There is a sandwich place near my work that I visit frequently for lunch. They have an Instagram account where they post their daily specials menu, which is convenient since I can see what the specials are before heading over. However, in some form of twisted sloth, I wanted to be told directly whenever there was a specials menu update. Thus Soup Bot was born.

The goal was simple: check for new posts on Instagram and if the new post is a specials board (and not some other photo) send a message over Slack. The anticipated plan was:

- Scrape all the posted images from the Instagram profile @davesfreshpasta and separate images of the specials board from the rest.

- Retrain a TensorFlow image classifier to learn the new categories of Soup and Not Soup.

- Create a script that will:

  - Check for new posts and classify them.

  - If the new post is Soup, send a message to Slack.

- Find a cheap and simple way to deploy the whole thing (I ended up going with AWS Lambda)

- Get soup on the days where there are specials that I like.
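The script step of the plan above can be sketched as a small loop. This is only an illustration under stated assumptions: the `Post` type, the `process_new_posts` name, and the injected `fetch_posts`, `classify`, and `notify` callables are all hypothetical stand-ins for the real Instagram, classifier, and Slack pieces.

```python
from datetime import datetime
from typing import Callable, Iterable, NamedTuple, Set


class Post(NamedTuple):
    """Hypothetical, minimal view of an Instagram post."""
    post_id: str
    image_url: str
    posted_at: datetime


def process_new_posts(
    fetch_posts: Callable[[], Iterable[Post]],
    classify: Callable[[Post], str],
    notify: Callable[[Post], None],
    seen_ids: Set[str],
) -> int:
    """Classify unseen posts and notify for each one labelled "soup".

    Returns the number of notifications sent.
    """
    sent = 0
    for post in fetch_posts():
        if post.post_id in seen_ids:
            continue  # already handled on an earlier run
        seen_ids.add(post.post_id)
        if classify(post) == "soup":
            notify(post)
            sent += 1
    return sent
```

Passing the three steps in as callables keeps the loop easy to exercise locally with fakes before any of the real services are wired up.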

Scraping the Training Corpus

In the beginning I was just toying with the idea of Soup Bot, so rather than script the process of downloading all the images I used a browser extension to download all ~7500 images from their Instagram profile and then sorted them by hand. This seemed reasonable at the time since it was a one-time process. Classification results for this initial corpus have been good enough so far that I'm not sure I will ever end up downloading new images and retraining.

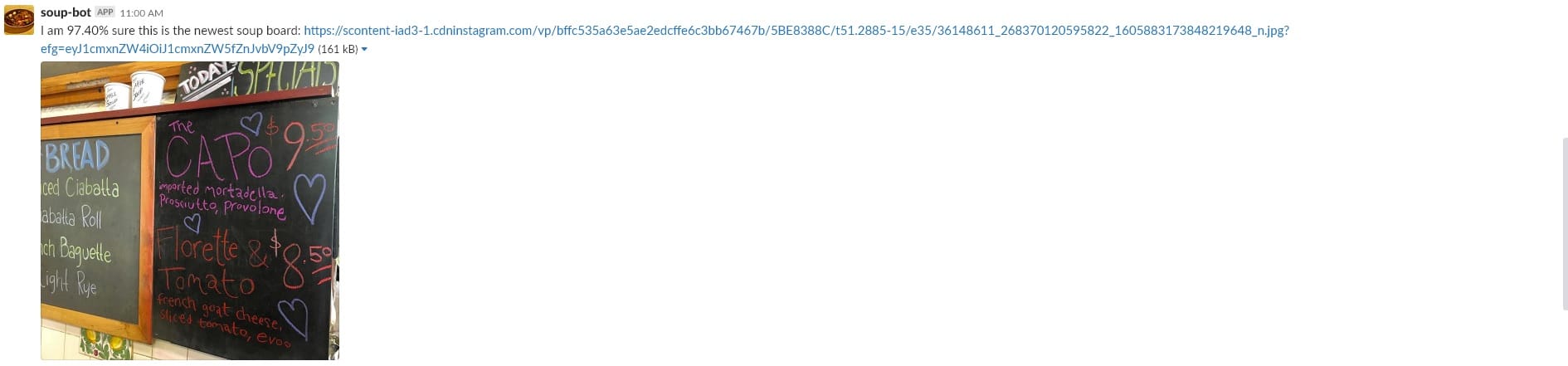

Here is an example of an image that I want to categorize as a specials board:

Here is an example of an image that should be the "not soup" category:

Classification

To tell which photos were specials boards and which were not, I needed to train an image classifier. Thankfully TensorFlow has a retraining script with great documentation for retraining an existing ImageNet classifier. After an initial test with the default retraining settings, I switched from the regular ImageNet classifier to a MobileNet classifier to cut operating costs. I dubbed it the Mobile Soup classifier. It resulted in a cool savings of:

- ~10x reduction in model size: 85 MB down to 8.9 MB (important since AWS Lambda constrains the size of your deployment bundle)

- ~2x increase in runtime speed: cProfile in PyCharm showed the total runtime of the TensorFlow method calls drop from ~6800 ms to ~3100 ms.

- ~3x reduction in memory usage (this metric is mostly eyeballed...)

- a relatively small loss in accuracy (that I have not put the time in to quantify). Luckily I only need to differentiate between two classes of images so accuracy has been very good (positive classifications are typically greater than 98%).
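With only two classes, turning the classifier's scores into a yes/no answer can be as simple as a threshold check. The sketch below assumes the scores arrive as a label-to-probability mapping; the label name `"soup"` and the 0.9 cut-off are illustrative assumptions, not values from the actual bot.

```python
from typing import Dict

SOUP_LABEL = "soup"    # assumed label name from the retrained model
MIN_CONFIDENCE = 0.9   # assumed cut-off, chosen only for illustration


def is_specials_board(scores: Dict[str, float],
                      threshold: float = MIN_CONFIDENCE) -> bool:
    """Return True when the top label is soup and its score clears the threshold."""
    if not scores:
        return False
    best_label = max(scores, key=scores.get)
    return best_label == SOUP_LABEL and scores[best_label] >= threshold
```

Since positive classifications typically come in above 98%, a cut-off anywhere below that would mostly just guard against the occasional ambiguous photo.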

The Script

Oddly enough, two weeks before I started development Instagram deprecated the official API methods I could have used. Luckily nature finds a way, and there are existing libraries like Instalooter that work by scraping pages rather than using the official APIs. The library makes it easy to fetch posts over a specific time range.

I keep track of the fetched images and the classifications using a standard setup with SQLAlchemy. Local testing runs against a SQLite database. The Lambda functions on AWS use a PostgreSQL RDS instance (the tiniest one they have).
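To give a feel for the bookkeeping, here is a minimal sketch of a table for fetched images and their classifications. It deliberately uses the standard library's `sqlite3` module instead of SQLAlchemy so it runs without extra dependencies, and the table layout, column names, and function names are all assumptions rather than the bot's real schema.

```python
import sqlite3

# Hypothetical schema: one row per fetched post and its classification.
SCHEMA = """
CREATE TABLE IF NOT EXISTS posts (
    post_id    TEXT PRIMARY KEY,
    image_url  TEXT NOT NULL,
    label      TEXT NOT NULL,
    confidence REAL NOT NULL
)
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def record_post(conn: sqlite3.Connection, post_id: str, image_url: str,
                label: str, confidence: float) -> bool:
    """Insert a classified post; return False if it was already recorded."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "INSERT OR IGNORE INTO posts VALUES (?, ?, ?, ?)",
            (post_id, image_url, label, confidence),
        )
    return cur.rowcount == 1
```

Using the post ID as the primary key means a repeated run cannot record, or announce, the same post twice.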

The final part was Slack integration. Originally I was going to go with a whole subscription model where people could ask to receive updates and then be notified with private messages, but a coworker pointed out I could just have a channel that the updates get posted to, which people could subscribe to (and that's much easier to do). I used the library Slacker to handle the integration.
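Posting to a channel boils down to a single call. The sketch below takes a Slacker-style client (anything exposing `chat.post_message(channel, text)`) as an argument so it can be tried with a fake; the function names, message wording, channel name, and token shown in the comment are all made up for illustration.

```python
from typing import Any


def format_message(image_url: str, confidence: float) -> str:
    """Build the announcement text (the wording is an assumption)."""
    return "New specials board posted ({:.0%} confident): {}".format(
        confidence, image_url
    )


def announce_specials(slack: Any, channel: str, image_url: str,
                      confidence: float) -> None:
    """Post the announcement via a Slacker-style client."""
    slack.chat.post_message(channel, format_message(image_url, confidence))


# Real usage would look roughly like this (requires `pip install slacker`
# and a valid token; the channel name here is hypothetical):
#   from slacker import Slacker
#   announce_specials(Slacker("xoxb-your-token"), "#soup", url, 0.99)
```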

Deploying

To save time with deploying, I went with Serverless to ease configuring AWS Lambda. Additionally, since the dependencies are quite large (we need to include TensorFlow and all its dependencies) and they need to be included in the deployed bundle, I used the Serverless Python Requirements plugin to help with bundling and compressing the dependencies (especially useful for getting the correct TensorFlow/NumPy binaries in the bundle). The main complications I ran into when setting up AWS were around VPC configuration, but others before me have already resolved those issues.

Finally, CloudWatch was used to schedule events that kick off the Lambda function and that start and stop the RDS instance in order to reduce costs.

Update 6/3/2018:

So it turns out NAT Gateways are quite pricey (~$30 a month). It seems other people have come to this realization as well. As an alternative I've replaced the NAT Gateway with a scheduled NAT Instance on EC2 using the images provided by AWS. Finally for additional cost savings I'm using another set of scheduled lambdas to start and stop the EC2 instance.

Update 6/30/2018:

Another caveat I found while monitoring the billing dashboard: AWS will not charge you for an Elastic IP associated with a running EC2 instance. However, if you keep an EIP associated with a stopped EC2 instance then you will be charged (around $3 a month) to incentivize people not to hoard IP addresses. See the AWS documentation for details on how EIP billing works.

Rather than creating a third set of lambdas to handle the allocation/association and deallocation/disassociation I instead dropped the original lambdas and created a new single Python 3.6 lambda to handle starting up and shutting down. Additionally I started the process of moving all the informal AWS configuration I had manually configured into the serverless configuration.
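A single handler for both directions might look roughly like the sketch below. It is not the actual Lambda: the event shape (`action` and `instance_id` keys), the function names, and the choice to allocate a fresh Elastic IP on every start are assumptions. The EC2 client is passed in so the logic can be exercised with a fake, and `boto3` is only imported when no client is supplied.

```python
from typing import Any, Dict, Optional


def start_nat(ec2: Any, instance_id: str) -> Dict[str, str]:
    """Start the instance, then allocate and attach an Elastic IP."""
    ec2.start_instances(InstanceIds=[instance_id])
    ec2.get_waiter("instance_running").wait(InstanceIds=[instance_id])
    allocation = ec2.allocate_address(Domain="vpc")
    association = ec2.associate_address(
        InstanceId=instance_id, AllocationId=allocation["AllocationId"]
    )
    return {
        "AllocationId": allocation["AllocationId"],
        "AssociationId": association["AssociationId"],
    }


def stop_nat(ec2: Any, instance_id: str) -> int:
    """Detach and release the instance's Elastic IPs, then stop it."""
    addresses = ec2.describe_addresses(
        Filters=[{"Name": "instance-id", "Values": [instance_id]}]
    )["Addresses"]
    for address in addresses:
        ec2.disassociate_address(AssociationId=address["AssociationId"])
        ec2.release_address(AllocationId=address["AllocationId"])
    ec2.stop_instances(InstanceIds=[instance_id])
    return len(addresses)


def handler(event: Dict[str, str], context: Any,
            ec2: Optional[Any] = None) -> Dict[str, Any]:
    """Lambda entry point; the event keys are a hypothetical convention."""
    if ec2 is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        ec2 = boto3.client("ec2")
    instance_id = event["instance_id"]
    if event["action"] == "start":
        return start_nat(ec2, instance_id)
    if event["action"] == "stop":
        return {"released": stop_nat(ec2, instance_id)}
    raise ValueError("unknown action: {!r}".format(event["action"]))
```

Releasing the address before stopping the instance is what avoids the charge for an EIP attached to a stopped instance. The trade-off in this sketch is that the public IP changes on every start.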

Update 8/16/2018:

I've migrated Soup Bot to use Aurora Serverless. Details are available in another post.

Conclusion

So in the end was it worth it? Probably not. Was it enjoyable? Certainly.
