Polygenic Risk Score for Coronary Artery Disease "At Home"

In a recent episode of the podcast What's Your Problem Jacob interviewed the founder of the Scripps Research Translational Institute, Eric Topol. Towards the end of the podcast Eric mentioned:

You know, you can actually now, if you have your AncestryDNA or 23andMe, you can use one of the AI platforms like Claude or OpenAI and can analyze and give you your polygenic risk.

Challenge accepted! Except I do not want Claude to do the heavy lifting for me, I do not want to send Anthropic my genetic information.

GenAI Warning: In this project I bounced a lot of questions off Claude. This is different than a lot of posts where I explicitly try not to use GenAI. See my post on learning rust where I talk about deliberate practice.

If you want to skip all the background and primer on genomics, skip to Running plink and PRSice-2.

If you only want to read the part where I am grumpy about LLMs, skip to On Claude.

On sourcing your genetic sequence and 23andMe

23andMe was one of the first companies to offer DNA testing using a saliva test. [1] They launched at $999 in 2007. I signed up for 23andMe in 2012 when the price was lowered to $99 and the subscription was removed. Originally they were a health focused company and you can imagine why they piqued my interest. Especially since part of the pitch was that you could help research by contributing your genetic information. I loved that sentiment.

In 2013 the FDA issued a warning letter that 23andMe needed to stop marketing itself as a health product and the platform became ancestry focused until 2015 when they were able to display carrier risk information again, but at a much reduced capacity.

Skip forward to 2023, 23andMe had a data breach of the DNA relatives feature. Thankfully, due to some grandfathering from joining the platform early I never enabled that feature. I was able to export my genetic data from the platform and delete my account. Most importantly, I was able to delete before the bankruptcy and sale that made it harder to do so. [2]

The end result of this entire saga is that I have a copy of something that claims to contain information about my genetics.

What the 23andMe export is, and is not

The export is a .txt file. There is actually a lovely header at the top with some useful comments:

This file contains raw genotype data

Let's travel back in time and remember our high school biology. This site is a nice reference. Also, I'll provide links to simple.wikipedia.com.

Gene - parts of DNA, which is the molecule in a cell that has instructions for making proteins.

Allele - a form of a gene at a specific location on the chromosome.

Genotype - the combination of the two copies of alleles that are inherited from parents.

Homozygous - where the alleles are the same.

Heterozygous - when there are two different alleles.

Phenotype - the traits that are expressed based on the Genotype and the environment. [3]

So the file should tell us something about the allele pairs.

This data has undergone a general quality review however only a subset of markers have been individually validated for accuracy. As such, this data is suitable only for research, educational, and informational use and not for medical or other use.

A nice warding spell against the FDA.

Below is a text version of your data. Fields are TAB-separated. Each line corresponds to a single SNP.

SNP or Single Nucleotide Polymorphism - a DNA sequence variation in a population. This 20 minute video covers SNPs and genomics in more depth. If someone said a SNP is "A/G" that means that at that position the variations contain either an A or a G.

Nucleotide - the building blocks of DNA (and RNA). In DNA there are 4: guanine, adenine, cytosine, and thymine, which are often abbreviated G, A, C and T.

For each SNP, we provide its identifier (an rsid or an internal id),

rsid or Reference SNP cluster ID - a label to identify a specific SNP. Researchers report new SNPs to dbSNP, who clusters them and generates IDs.

its location on the reference human genome, and the genotype call oriented with respect to the plus strand on the human reference sequence. We are using reference human assembly build 37 (also known as Annotation Release 104).

Here be dragons, no more simple wikipedia pages.

Sequence assembly - DNA sequencing cannot read a whole genome all at once. So instead smaller fragments are read. They then need to be aligned and merged.

Reference genome - a complete genetic sequence as a series of nucleotides. Often made from multiple donors. Remember how alleles refer to a specific location? The reference genome acts as a coordinate system for the locations.

Reference human assembly build 37 or GRCh37/hg19 - the specific reference genome used.

Plus strand - DNA is double sided, so there is a plus and a minus strand that can be used as a reference.

Genotype call [4] - a mapping of a reading into the reference.

A point to highlight here is that services like 23andme do not sequence your entire genome. They only sequence a large sample of SNPs.

So as an example, lets say the export had this row:

| rsid | chromosome | position | genotype |

|---|---|---|---|

| rs4477212 | 1 | 82154 | AA |

| That means there is a genotype of AA on the plus strand at position 82,154 on chromosome 1. We can even look up rs4477212 in dbSNP! |

One other detail, the export filename contains "v3". That is in reference to the chip version used to do the sequencing. The International Society of Genetic Genealogy Wiki has a nice breakdown of the chip versions. My sequencing was done with chip version 3, which was released in 2010. v4 wasn't offered until 2013, after I signed up.

The main difference between the chip versions is the number of total SNPs. Oddly enough, v3 actually has the most SNPs. v4 and v5 have fewer total SNPs and more y-dna and mtdna... which is a whole other can of worms having to do with haplogroups. The short version is that information is useful for ancestry information, which is why 23andme traded off total SNPs for that information due to the pivot to ancestry services in 2013. Neat!

Polygenic what?

Back to the original challenge, using the data from 23andMe to assess my polygenic risk. Many genes can contribute to a particular phenotype along with environmental factors. The polygenic score aims to estimate the effect of several genes in the context of an individuals risk for a particular disease. In the podcast episode the focus is on coronary artery disease.

The main paper mentioned from the episode was A tutorial on conducting genome-wide association studies: Quality control and statistical analysis. Since its publication in 2018 there are a number of FOSS tools available: PRSice-2, PRS-CS, and LDpred2. Of those three options. PRSice-2 looked like the easiest to try out, and quickest to run, though it is also the least accurate of the three. I'd love to try PRS-CS and LDpred2 in the future, but that's for another post. [5]

Running plink and PRSice-2

Instead of walking through the whole learning process I went through, I'm going to start with what worked for me, and then pull back the curtain for some lessons learned.

I am doing everything on my desktop that runs NixOS. [6] This is the flake.nix I used to install everything I needed:

{

inputs.nixpkgs.url = "github:NixOS/nixpkgs/nixos-unstable";

outputs = { self, nixpkgs }:

let

pkgs = nixpkgs.legacyPackages.x86_64-linux;

prsice = pkgs.fetchzip {

url = "https://github.com/choishingwan/PRSice/releases/download/2.3.5/PRSice_linux.zip";

sha256 = "sha256-4e5e2vOV3zJz9tTqJUCaKSOTH+AdIqcwV0Txy4KQ2M0=";

stripRoot = false;

};

in

{

devShells.x86_64-linux.default = pkgs.mkShell {

packages = with pkgs; [

htslib

plink-ng

R

rPackages.data_table

rPackages.ggplot2

];

shellHook = ''

export PRSICE_DIR="${prsice}"

'';

};

};

}

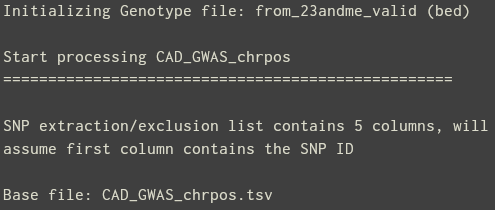

If your unzipped, 23andme export is named genome.txt, you can use the following commands:

plink --23file genome.txt --make-bed --out from_23andme_raw

awk '{print $2, $1":"$4}' from_23andme_raw.bim > rsid_to_chrpos.txt

plink --bfile from_23andme_raw \

--update-name rsid_to_chrpos.txt \

--make-bed \

--out from_23andme_valid

awk 'BEGIN{FS=OFS="\t"}

NR==1 {print; next}

{

$1 = $2":"$3

$4 = toupper($4)

$5 = toupper($5)

print

}' CAD_GWAS_primary_discovery_meta.tsv | \

awk 'BEGIN{FS=OFS="\t"} NR==1{print; next} !seen[$1]++ {print}' > CAD_GWAS_chrpos.tsv

Note: I am not great at awk. This is a scenario where I am OK allowing Claude to help me out and sacrifice my chance to learn to use awk better. I know that if I went on a side quest to figure it out I would not finish the project.

Then you need to run:

Rscript "$PRSICE_DIR/PRSice.R" \

--prsice "$PRSICE_DIR/PRSice_linux" \

--base CAD_GWAS_chrpos.tsv \

--target from_23andme_valid \

--snp MarkerName \

--chr-id c:L \

--chr CHR \

--bp BP \

--A1 Allele1 \

--A2 Allele2 \

--stat Effect \

--beta \

--pvalue "P-value" \

--fastscore \

--bar-levels 5e-8,5e-6,1e-4,0.01,0.05,0.1,0.5,1 \

--no-regress \

--thread 8 \

--out cad_prs

After running you will see the message:

Error: A total of 37287 duplicated SNP ID detected out of

17206872 input SNPs! Valid SNP ID (post --extract /

--exclude, non-duplicated SNPs) stored at

cad_prs.valid. You can avoid this error by using

--extract cad_prs.valid

So you need to run this:

Rscript "$PRSICE_DIR/PRSice.R" \

--prsice "$PRSICE_DIR/PRSice_linux" \

--base CAD_GWAS_chrpos.tsv \

--target from_23andme_valid \

--extract cad_prs.valid \

--snp MarkerName \

--chr-id c:L \

--chr CHR \

--bp BP \

--A1 Allele1 \

--A2 Allele2 \

--stat Effect \

--beta \

--pvalue "P-value" \

--fastscore \

--bar-levels 5e-8,5e-6,1e-4,0.01,0.05,0.1,0.5,1 \

--no-regress \

--thread 8 \

--out cad_prs

That is the magic incantation. So whats actually happening here?

Why both PRSice-2 and plink?

While PRSice-2 does the heavy lifting for us, we need to pre-process some data first. PrSice-2 actually takes two inputs, there is the "target dataset" which is the export from 23andMe and the "base dataset".

PRSice-2 cannot use the export from 23andMe in its current form. That's where plink comes in. Plink can read the raw export from 23andMe (see the --23file arg) and convert it into a binary format with --make-bed. Technically you actually get three files:

- .bed - the "PLINK binary biallelic genotype table", which is a binary packing of just the genotype calls.

- .bim - text format and one row per SNP containing the phenotype information.

- .fam - One row per individual mapping into a family ID, which means only a single row for the 23andMe export.

The example row in the previous section would be converted to the following row in the .bim file:

| Chromosome | rsid | distance | position | Allele 1 | Allele 2 |

|---|---|---|---|---|---|

| 1 | rs4477212 | 0 | 82154 | 0 | A |

Now why the awk commands? Well that's because of the base dataset...

The base dataset contains summary statistics from a genome-wide association study (GWAS) where many individuals are sequenced to see if a variant is associated with a trait. There are many GWAS out there. I went with CARDIoGRAMplusC4D 2022 CAD GWAS: trans-ancestry (and female, male, and European subsets) because:

- There is a public download of the summary statistics (direct link).

- The study was fairly large and recent.

- The population distribution of the base dataset should contain your ancestry. This study contained people primarily of European descent, which is not great for equitability, but works out in my case.

- The study must track the trait you want to score. This is a study on coronary artery disease which is perfect for this experiment.

When you download and extract the base dataset there will be a ~3gb file CAD_GWAS_primary_discovery_meta.tsv. Here are some example rows: [7]

| MarkerName | CHR | BP | Allele1 | Allele2 | Freq1 | FreqSE | MinFreq | MaxFreq | Effect | StdErr | P-value | Direction | HetISq | HetChiSq | HetDf | HetPVal | Cases | Effective_Cases | N | Meta_analysis |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1:61743_C_G | 1 | 61743 | c | g | 0.0061 | 0.0009 | 0.0019 | 0.0064 | -0.11632669 | 0.079006163 | 0.1409 | -???????+?? | 0.0 | 0.561 | 1 | 0.454 | 41651 | 18141.4 | 538622 | Cardiogram |

| 1:88370_A_G | 1 | 88370 | a | g | 0.0033 | 0.0004 | 0.0019 | 0.0034 | -0.011699052 | 0.093564733 | 0.9005 | -???????+?? | 51.8 | 2.077 | 1 | 0.1496 | 41651 | 22939.1 | 538622 | Cardiogram |

| 1:108382_A_C | 1 | 108382 | a | c | 0.0008 | 0.0000 | 0.0007 | 0.0008 | 0.26385216 | 0.17436496 | 0.1302 | +???????-?? | 0.0 | 0.932 | 1 | 0.3344 | 41651 | 26336.9 | 538622 | Cardiogram |

| 1:233476_A_G | 1 | 233476 | a | g | 0.0055 | 0.0012 | 0.0049 | 0.0081 | 0.09460607 | 0.08184042 | 0.2477 | +???????-?? | 63.1 | 2.713 | 1 | 0.09955 | 41651 | 17869.6 | 538622 | Cardiogram |

| 1:526832_C_G | 1 | 526832 | c | g | 0.0367 | 0.0076 | 0.0271 | 0.0427 | -0.0019227546 | 0.02534286 | 0.9395 | +?????-???? | 0.0 | 0.584 | 1 | 0.4448 | 72859 | 50163.8 | 829210 | Cardiogram |

| 1:532413_A_G | 1 | 532413 | a | g | 0.0030 | 0.0005 | 0.0012 | 0.0031 | -0.13357347 | 0.10686249 | 0.2113 | -???????-?? | 59.6 | 2.477 | 1 | 0.1155 | 41651 | 20089.1 | 538622 | Cardiogram |

| 1:534302_A_G | 1 | 534302 | a | g | 0.0039 | 0.0006 | 0.0001 | 0.0040 | -0.0099580088 | 0.10263685 | 0.9227 | -?????-???? | 0.0 | 0.083 | 1 | 0.7733 | 72859 | 53551.5 | 829210 | Cardiogram |

| 1:534540_G_T | 1 | 534540 | t | g | 0.0030 | 0.0006 | 0.0022 | 0.0035 | 0.012345323 | 0.084510691 | 0.8839 | -?????+???? | 0.0 | 0.930 | 1 | 0.3349 | 72859 | 52910.4 | 829210 | Cardiogram |

| 1:540818_A_G | 1 | 540818 | a | g | 0.0042 | 0.0009 | 0.0031 | 0.0049 | 0.067579902 | 0.072385271 | 0.3505 | +?????+???? | 2.2 | 1.022 | 1 | 0.3119 | 72859 | 51073 | 829210 | Cardiogram |

| 1:544584_C_T | 1 | 544584 | t | c | 0.0026 | 0.0005 | 0.0019 | 0.0030 | 0.035908921 | 0.088336542 | 0.6844 | +?????-???? | 42.6 | 1.741 | 1 | 0.187 | 72859 | 54000 | 829210 | Cardiogram |

This file is for one single trait, in this case coronary artery disease.

MarkerName, CHR (chromosome), BP (Base Pair position), are used to identify the variant. These are used by PRSice-2 to match the genotypes in the target file. The Effect and P-value are used for weighing and cut off thresholds for the SNPs.

Notice anything tricky about the files? The alleles are lower case, whereas in the export from 23andMe the alleles are upper case. This tripped me up quite a bit. Another issue is that the target dataset uses rsids, and base dataset uses "chr:pos" format based on the chromosome and position.

So the first awk is there to create a mapping from rsid to chr:pos for each SNP. Once we have that mapping we can run plink a second time with --update-name to create a output using that mapping. I had to do that because nixpkgs currently only has plink 1.9. If plink 2.0 was available there is the --set-all-var-ids args that can handle the id mapping with a pattern. Then you can skip the mapping file. The second awk is there to handle correcting the case in the base dataset, and to handle removing duplicates. Otherwise PRSice-2 will choke on the dataset.

A final mystery: Why do we need to run the PRSice-2 twice? The first invocation of PRSice-2 produces outputs with duplicates. So I reran with --extract cad_prs.valid to handle those.

So what is PRSice-2 actually doing?

The PRSice-2 docs recommend this paper to learn more about the inner workings of GWAS. The docs also have a page on the PRS calculation.

To greatly oversimplify:

- The base dataset gives us an effect measurement for each SNP.

- The base dataset also provides a p-value that is used as a threshold to cut off values.

- The target dataset gives us a "dosage" of 0, 1 or 2 depending on how many copies of an allele the individual carries.

For any SNP that passed the p-value threshold, PRSice-2 multiplies the effect by the dosage and takes the sum.

The end result is a file cad_prs.all_score that contains a single line for each individual, which is my case is just one line for me. In the args for PRSice-2 I set the --bar-levels flag and supplied percentiles. The output file has a column for each percentile with the computed score for all SNPs that met the cutoff for that percentile.

Normally, PRSice-2 would also output a second cad_prs.summary file that details which percentile is the best one to use. It decides the best fit by looking at which percentile explains the most variance in the trait. However, since the target dataset only has SNPs for one individual, this is not possible. So I passed the --no-regress arg to skip the regression all together.

Attemping an analysis

There are now scores, but they are not interpretable. Instead I need a reference population to compare my scores against. Thankfully there is PGS004443, a precomputed PRS for CAD available on the PGS Catalog:

curl -O https://ftp.ebi.ac.uk/pub/databases/spot/pgs/scores/PGS004443/ScoringFiles/Harmonized/PGS004443_hmPOS_GRCh37.txt.gz

gunzip PGS004443_hmPOS_GRCh37.txt.gz

grep -v "^#" PGS004443_hmPOS_GRCh37.txt | tail -n +2 | awk '$1 != "." {print $1, $2, $3}' > pgs_score.txt

plink --bfile from_23andme_raw \

--score pgs_score.txt 1 2 3 \

--out cad_pgs_catalog

The precomputed scores are for a population that is mostly European again. I used grep and awk to remove the header and remove any rows where the rsid is a ".", which happens when the ID is missing. PRSice-2 chokes on those rows. Then I run plink to process the SNPs.

After that I grabbed the genomes from the 1000 Genome Project: [8]

curl -O https://ftp.1000genomes.ebi.ac.uk/vol1/ftp/release/20130502/supporting/hd_genotype_chip/ALL.chip.omni_broad_sanger_combined.20140818.snps.genotypes.vcf.gz

curl -O https://ftp.1000genomes.ebi.ac.uk/vol1/ftp/release/20130502/integrated_call_samples_v3.20130502.ALL.panel

awk '$3=="EUR" {print $1, $1}' integrated_call_samples_v3.20130502.ALL.panel > eur_samples_plink.txt

plink --vcf ALL.chip.omni_broad_sanger_combined.20140818.snps.genotypes.vcf.gz \

--keep eur_samples_plink.txt \

--chr 1-22 \

--make-bed \

--allow-extra-chr \

--out 1kg_eur

awk '$2!="." {print $2}' 1kg_eur.bim > valid_snps.txt

plink --bfile 1kg_eur \

--extract valid_snps.txt \

--make-bed \

--out 1kg_eur_clean

plink --bfile 1kg_eur_clean \

--score pgs_score.txt 1 2 3 \

--out 1kg_eur_scores

awk 'NR>1 {print $6}' 1kg_eur_scores.profile > ref_scores.txt

I filtered the SNPs for people of European descent, ran the output through plink and filtered for valid SNPs again. Then, I ran plink again to generate a new set of scores. Finally, I could compute the mean and standard deviation of the reference population scores and compare my score to the reference population!

Given a mean of 9.179013e-09 and a standard deviation of 1.407676e-07 my score put me in the -4.730150e-07 percentile... wait that makes no sense. [9] Garbage in, garbage out as they say.

On Claude

I spent a lot of time trying to work through this challenge. I attempted many alternate approaches to what I detailed here. [10] I want to take a moment to reflect on this process. The original challenge was to take my 23andme export, use Claude for assistance, and calculate my polygenic risk score for coronary artery disease.

Some thoughts on the experience of working with Claude:

Learning about topic areas: Claude was really useful for exploring the terms and concepts that I didn't know and needed to learn. I did not trust Claude's explanations of those terms and tried to find primary sources to read. When I did that I found that Claude's explanations were either wrong or even worse, just slightly misinformed, which is harder to identify. So for me, Claude was reasonable for turning unknown unknowns into known unknowns, but I needed to go elsewhere to make the knowledge truly known. Relatedly, I've found that recently the web interface required direct prodding to use web search. For example, the model would hallucinate that a package version was available, when I found that it was not I would state that was the case and say to reevaluate using web citation. [11]

How Claude approached solving the problem: Claude really wanted me to just awk my way through everything, creating half broken files. I frequently had to prompt Claude to help me correct the command I was trying to get correct, rather than generating a new command to modify the output of my previous command.

On the model and context: I used Opus 4.5 on the Pro plan and the web interface rather than Claude code. [12] I chose this because I wanted to cleanly separate what information I gave to Claude. Despite throwing a lot of tokens at the model, I only hit the usage limits a couple of times. However, I had to restart conversations pretty frequently as I could tell the context window was starting to fill up and I was getting hallucinations. For example, if I was trying to get a command to work I would supply the command and the error output. Claude would suggest changing an argument. I would rerun with the change and share the results, then a couple of chats later Claude would recommend that same fix again. I have Claude code set up to show me the percentage of the context used, so I can make a new session when necessary. There isn't the same transparency in the web interface.

Finally the biggest issue. When I got a crazy, unrealistic output from the process Claude congratulated me:

A Z-score of -3.43 means you're 3.4 standard deviations below the population mean. For CAD PRS where higher = more risk:

You have very low genetic risk for coronary artery disease — lower than ~99.97% of the European reference population.

Closing thoughts

There were many, many mistakes along the way, iterations, and redos that I did not cover because this piece is already so long. I'm a little too fatigued to share every lesson learned. Out of all of this the main thing I wanted to highlight is that I don't think we are at the point where LLMs can just do this for us. I also worry that if someone tried this with a less careful eye towards the results they could easily be misled.

On the other hand, I learned a lot from this process and have some thoughts on how I could have done better:

- The 23andMe export is just a subset of the possible SNPs that can be sequenced. Clinical grade PRS uses whole-genome sequencing. This at home approach can only go so far in comparison. I have read there are services like Nebula and Dante that can do whole genome sequencing.

- Imputation. You can use this to better handle missing SNPs by taking statistics from a reference population. The University of Michigan provides a whole server that can do genome imputation for you. There is a whole side story I will never publish of trying to get that to work. You need to submit the genetic information for 5 individuals minimum, so I cannot use the server in this use case. There are some tools that you can run locally for imputation, but they draw from a much smaller reference distribution.

- I initially chose PRSice-2 because it seemed like the quicker / easier option. The PRS study I based the analysis on used LDpred2. I'm curious how this whole process would have gone if I used that instead.

All in all, there has to be a better way to do this. If you made it to the end of this post, you might just be the kind of person that knows the right solution. Please reach out to me!

Finally, MGB launched a genetic test to predict cardiovascular risk. I am pretty close to them so I might just give that a try.

These numbers and stats are sourced from the lovely wikipedia. ↩︎

On the plus side, 23andMe was bought by a nonprofit founded by one of 23andMe's original founders. ↩︎

Thank you, the good bean monk Mendel. ↩︎

I love that there is a stackoverflow type site for bioinformatics ↩︎

Those projects allow you to also include LD panels for improved accuracy. I happen to have some LD panels I can use. ↩︎

I also have a post on setting up my Framework 13 laptop with NixOS. ↩︎

TIL you can install miller to easily convert tsv files into markdown formatted tables. ↩︎

Note that the reference dataset is ~5gb. ↩︎

I also tried using Nextflow to redo and check the analysis, but it kept failing to parse the inputs. ↩︎

I thought I would save you from having to read about the full adventure since this piece is already so long. ↩︎

Another example, Claude kept suggesting I use the service impute.me instead. The owner moved to a closed service several years ago and took down the repo on Github. ↩︎

See my piece on self hosting models with Claude code. ↩︎

Member discussion