NeurIPS 2025 - Wednesday Notes

Updated 2025-12-19: I've moved any metacommentary about the conference into a retrospective post.

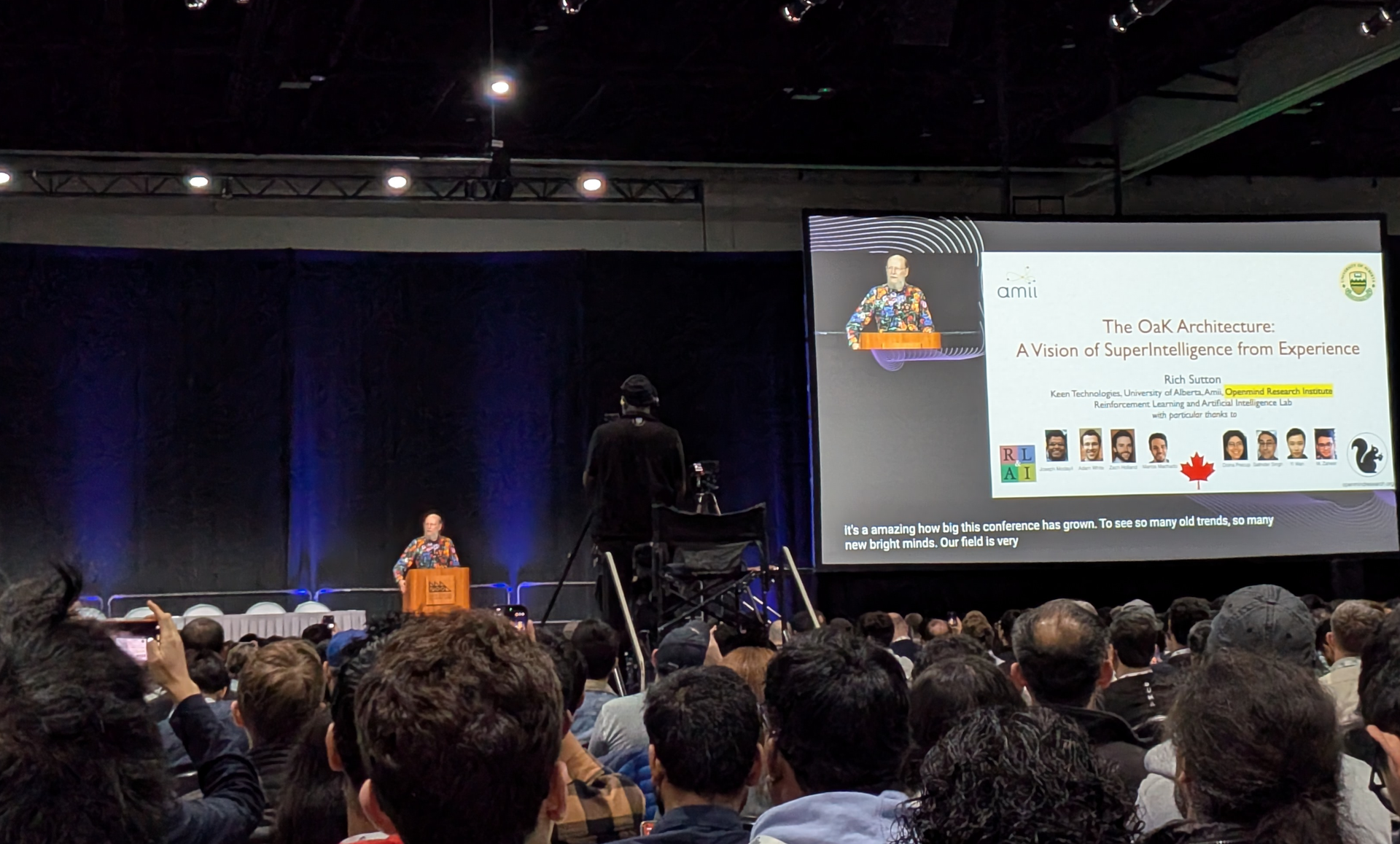

Invited talk: The Oak Architecture: A Vision of SuperIntelligence from Experience by Rich Sutton

First up in the morning was an invited talk. I enjoyed starting the day with something more abstract and philosophical. To quote the speaker:

"I'm not going to share any results today."

Instead, he talked about how, amid all our successes in this field, we might lose sight of our goal. We should make sure to focus on all attributes of intelligence, not just a narrow version. As he phrased it: "a vision of super-intelligence without bitterness".

To him, the "bitter lesson" is an observation, based on the history of the field, that models shouldn't depend on human input. The world is big, and we need models that can grow at runtime. Nothing domain-dependent should be built into the model. Agents only approximate the world; nothing is exactly correct or optimal. So they must be able to form new abstractions at runtime.

Professor Sutton described himself as "not a contrarian, but a classicist" who is looking for a consensus point of view. He shared this really cool table where he tried to compare different terminology across fields:

| Reinforcement Learning / AI | agent | environment | action | observation | reward, goal | value function | state-update function | policy | world model, knowledge | state representation |
|---|---|---|---|---|---|---|---|---|---|---|
| Psychology / Neuroscience | subject, organism | world | response | stimulus | reward | secondary reinforcement | perception | stimulus-response association | world model | situation |
| Control Theory / OR | controller | system, plant | control | input | payoff, gain, cost | value function | state estimator | control law | system model | state |
| Economics / Philosophy | person, agent, company | world | choice | sensation | payoff, goal | utility, value system, ethics | perception | decision rule | knowledge | state |
| "consensus" terminology | agent | world | action | observation | reward | value function | perception | reactive policy | transition model | subjective state |

[1]

He set up this "consensus" terminology so he could describe Options and Knowledge (OaK). He designed OaK to get past the one-step trap: the belief that one-step predictions are sufficient and that long-term predictions can be made by chaining many individual steps.

Instead, OaK is based on the insight that:

- Every feature has a corresponding subproblem.

- If an agent can generate new features, then it can create new subproblems.

OaK is a vision for a shallow "seed" super-intelligence. However, we cannot get there until we first develop reliable deep continual learning and meta-learning.

Oral Session: Oral 1A Language Model 1

During this block there were a couple of different talks I wanted to go to, but they were in different rooms. You can run between sessions if you want, but the timing is so tight you'll likely miss parts of the talks. I chose this session because the second talk won a best paper award. The three talks were:

Understanding and Mitigating Numerical Sources of Nondeterminism in LLM Inference

- Floating point math is not associative.

- The order of operations during training and inference is non-deterministic. Both batch size and GPU count can also impact the ordering.

- This gets even worse at lower precision.

- Mixture of Experts is particularly vulnerable to this due to both routing and low precision.

Accuracy typically varies by ~1-2% from this nondeterminism, but the variation can be as high as 9%. So you need an improvement of about 4% during evaluation to really feel confident.

You can use batch-invariant kernels and tensor-parallel-invariant kernels to get around this.
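The first two bullets are easy to reproduce in plain Python. The `split_sum` helper below is hypothetical: it stands in for a reduction whose work gets split differently depending on batch size or GPU count, and real inference kernels are far more involved. The explicit accumulation loop matters because, starting with Python 3.12, the built-in `sum()` uses compensated summation for floats, which would hide the effect.

```python
# Floating point addition is not associative: the grouping changes the rounded result.
print((0.1 + 0.2) + 0.3)  # 0.6000000000000001
print(0.1 + (0.2 + 0.3))  # 0.6


def sequential_sum(values):
    """Left-to-right accumulation with one rounding step per addition."""
    total = 0.0
    for v in values:
        total += v
    return total


def split_sum(values, num_parts):
    """Hypothetical helper: sum each contiguous part separately (as if each part
    ran on a different GPU or thread block), then combine the partial sums."""
    size = -(-len(values) // num_parts)  # ceiling division
    partials = [sequential_sum(values[i:i + size]) for i in range(0, len(values), size)]
    return sequential_sum(partials)


# Near 1e16 the gap between adjacent doubles is 2.0, so adding 1.0 can round away.
values = [1e16, 1.0, -1e16, 1.0]  # the exact sum is 2.0
print(split_sum(values, 1))  # 1.0
print(split_sum(values, 2))  # 0.0
```

Neither split recovers the exact answer of 2.0, and the two splits disagree with each other. As I understand it, batch-invariant kernels target the second problem by keeping the reduction order fixed no matter how the batch is divided up.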

Artificial Hivemind: The Open-Ended Homogeneity of Language Models (and Beyond)

- How well do LLMs handle open-ended queries?

- They used a model-as-a-judge to classify a collection of language model queries as open-ended.

- Using their Infinity-Chat dataset, they ran a large-scale study of model collapse.

- They found both intra-model repetition (a single model giving similar answers) and inter-model homogeneity (different models giving similar answers).
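To make the two findings concrete, here is a toy Python sketch that scores them as mean pairwise similarity: within one model's samples for repetition, and across two models' samples for homogeneity. This is not the paper's methodology; word-set (Jaccard) overlap is a crude stand-in I chose for illustration, and the responses are made up.

```python
from itertools import combinations, product


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two responses: 1.0 = same set of words, 0.0 = disjoint."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    union = wa | wb
    return len(wa & wb) / len(union) if union else 1.0


def mean_similarity(pairs) -> float:
    pairs = list(pairs)
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


def intra_model_similarity(samples: list[str]) -> float:
    """Repetition: how alike are several samples from ONE model?"""
    return mean_similarity(combinations(samples, 2))


def inter_model_similarity(samples_a: list[str], samples_b: list[str]) -> float:
    """Homogeneity: how alike are samples from TWO different models?"""
    return mean_similarity(product(samples_a, samples_b))


# Hypothetical samples for the open-ended prompt "Write a metaphor about time."
model_a = ["Time is a river", "Time is a river that flows", "Time is a thief"]
model_b = ["Time is a river", "Time is a flowing river"]

print(f"intra-model (A): {intra_model_similarity(model_a):.2f}")
print(f"inter-model (A vs B): {inter_model_similarity(model_a, model_b):.2f}")
```

A real study would want semantic similarity (for example, sentence embeddings) rather than word overlap, since two answers can share the same idea without sharing words.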

SAVVY: Spatial Awareness via Audio-Visual LLMs through Seeing and Hearing

- Spatial audio can provide important context about the world to multimodal language models.

- The authors made a benchmark by gathering scene recordings with Project Aria glasses.

- Most major models today compress stereo audio into mono, and because of that they do poorly on the benchmark.
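A toy Python illustration of why the mono downmix hurts. It uses only one spatial cue, the level difference between the two channels (real systems also rely on timing and phase differences), and the tone and amplitudes are made up rather than taken from the SAVVY benchmark.

```python
import math


def rms(signal):
    """Root-mean-square amplitude of a list of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def level_difference_db(left, right):
    """Level difference between channels in dB; positive = louder on the left."""
    return 20 * math.log10(rms(left) / rms(right))


def downmix_to_mono(left, right):
    """Average the two channels, a common way to collapse stereo to mono."""
    return [(lv + rv) / 2 for lv, rv in zip(left, right)]


# Hypothetical 0.1 s, 440 Hz tone sampled at 16 kHz.
sample_rate = 16_000
tone = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate // 10)]

# Two mirrored scenes: the same source on the listener's left vs. right.
left_scene = ([1.0 * x for x in tone], [0.5 * x for x in tone])
right_scene = ([0.5 * x for x in tone], [1.0 * x for x in tone])

print(f"{level_difference_db(*left_scene):+.1f} dB")   # +6.0 dB
print(f"{level_difference_db(*right_scene):+.1f} dB")  # -6.0 dB

# After downmixing, the two scenes produce identical audio, so the direction is lost.
print(downmix_to_mono(*left_scene) == downmix_to_mono(*right_scene))  # True
```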

- Two terms I didn't know from the spatial processing field:

  - allocentric - understanding the relative locations of things in the world.

  - egocentric - understanding things in the world relative to the agent's own position and direction.
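The distinction boils down to a change of coordinate frame. A minimal 2D Python sketch, assuming a flat map, positions in metres, and a heading measured counterclockwise from the map's x-axis (all conventions I picked for illustration, not from the paper):

```python
import math


def allocentric_to_egocentric(obj_xy, agent_xy, agent_heading_rad):
    """Convert a world-frame (allocentric) position into the agent's own frame
    (egocentric). Returns (forward, left): metres ahead of the agent and metres
    to its left. Heading is measured counterclockwise from the world +x axis."""
    dx = obj_xy[0] - agent_xy[0]
    dy = obj_xy[1] - agent_xy[1]
    cos_h, sin_h = math.cos(agent_heading_rad), math.sin(agent_heading_rad)
    # Rotate the world-frame offset by -heading so the agent faces +x.
    forward = cos_h * dx + sin_h * dy
    left = -sin_h * dx + cos_h * dy
    return forward, left


def rounded(pair, digits=6):
    """Round away tiny floating point noise for display."""
    return tuple(round(v, digits) for v in pair)


# Hypothetical room: the door is at (2, 5) on the map no matter where anyone stands.
door = (2.0, 5.0)
agent = (2.0, 0.0)

# Facing "north" (+y), the door is 5 m straight ahead.
print(rounded(allocentric_to_egocentric(door, agent, math.pi / 2)))
# After turning to face "east" (+x), the same door is 5 m to the agent's left.
print(rounded(allocentric_to_egocentric(door, agent, 0.0)))
```

The allocentric fact (where the door is on the map) never changes; only the egocentric description changes as the agent moves or turns.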

This is a great example of why it's nice to stay in a session sometimes and give all the talks a listen. The first and third talks were not ones I would have picked out from the list, but I ended up liking them more than the second talk, which was what drew me to that session.

Workshop: On Device/Edge AI by the PyTorch Foundation folks

A "packed" schedule:

- LFM2- Designing the next-generation foundation model architecture for edge AI | Matthias Lecher (Liquid AI)

- Leveraging ExecuTorch as a platform for mixed reality at scale | Andrey Tovchigrechko (Meta)

- Realtime reasoning at the edge | Varun Khare (Nimble Edge)

- Bendable non-silicon RISC-V microprocessor | Vijay Janapa Reddi (Harvard)

- Mamba and SSM running at the edge | Karan Goel (Cartesia)

- Unlocking AI on the Edge for PyTorch Developers | Felix Baum (Qualcomm)

- What's next in LM Studio: customize local models with Inference Lifecycle Plugins | Yagil Burowski (LMStudio)

The workshop was designed to highlight that "the future is not just the cloud, and private edge devices are needed", which really caught my eye. See my post Using Self-hosting Language Models So You Can Evaluate Claude Code for some of my thoughts. The talks were also cool because I've only recently started to appreciate the differences between the embedded world and server land.

Some of the talks really stood out to me.

LFM2- Designing the next-generation foundation model architecture for edge AI | Matthias Lecher (Liquid AI)

- With embedded devices there is not just a compute gap but a memory gap as well, which is especially important for language models.

- There is a need for inference-aware architectures tailored to their deployment target.

- We have transformers, but there are other architectures.

- They are trying to automate foundation model design.

- They created an evaluation strategy that tests candidate designs by running actual operations on device with ExecuTorch, measuring speed and memory.

- LFM2 is the architecture that fell out of the search.
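To put numbers on the memory gap, here is a back-of-the-envelope Python estimate for a transformer: the weights plus the key/value cache, which grows with every token of context. The model size and dimensions are hypothetical round numbers, not LFM2's.

```python
GIB = 1024 ** 3


def weight_bytes(num_params: int, bytes_per_param: float) -> float:
    """Memory needed just to hold the model weights."""
    return num_params * bytes_per_param


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   context_len: int, bytes_per_value: float) -> float:
    """Transformer key/value cache: one key and one value vector (hence the 2)
    per token, per layer, per KV head. Grows linearly with context length."""
    return 2 * num_layers * num_kv_heads * head_dim * context_len * bytes_per_value


# Hypothetical ~1B-parameter transformer stored in 16-bit precision (2 bytes/value).
weights = weight_bytes(1_000_000_000, 2)
for context_len in (4_096, 32_768, 131_072):
    kv = kv_cache_bytes(num_layers=16, num_kv_heads=8, head_dim=64,
                        context_len=context_len, bytes_per_value=2)
    print(f"{context_len:>7} tokens: weights {weights / GIB:.2f} GiB, "
          f"KV cache {kv / GIB:.2f} GiB")
```

With these made-up dimensions the cache costs 32 KiB per token, so at 131,072 tokens it needs 4 GiB, more than twice the roughly 1.86 GiB of weights. Quantizing the weights only shrinks the first term; the cache still grows with every token.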

Leveraging ExecuTorch as a platform for mixed reality at scale | Andrey Tovchigrechko (Meta)

- The slide had a different title - ExecuTorch: On the Path to the "Ideal Compiler". [2]

- Meta has to run software on devices like the Ray-Ban Meta AI glasses.

- A lot of this comes down to a good compiler, and ExecuTorch fills that role.

- It is hard to meet all three goals at once:

  - Usability - reduce time to deploy.

  - Performance - overcome the limits of vendor tools. Vendor tools vary in how open they are, and sometimes direct hardware access is not available.

  - Portability - there are many model types and hardware types, so there is a large number of combinations.

- They created an open platform: a wiki-style library of compilation recipes, plus tooling that automatically picks a compilation path based on the hardware.

- This is the power of open source: each optimization method someone contributes helps everyone else.

Mamba and SSM running at the edge | Karan Goel (Cartesia)

- Context allows models to provide personal experiences. On-device models need long contexts but don't have much memory.

- Cloud models are large and not feasible to deploy on edge devices.

- They want to move the Pareto frontier so you don't have to make that trade-off.

- Compressing models without sacrificing quality.

- One way is distillation: take outputs from a teacher model to train a smaller model. The main takeaway here is that the models do not need to have the same architecture. For example, you can go from attention-based models to Mamba-based recurrent models. The easier we make this conversion, the more we can explore this solution space.

- They created Llamba for systematically scaling distillation architecture search.
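For readers new to distillation, here is the classic soft-target formulation in plain Python: the student is trained to match the teacher's temperature-softened output distribution. This is a generic sketch, not the Llamba recipe from the talk, and the logits are made up.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature = softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened output distributions.

    Only the output logits are compared, so the teacher and student can have
    completely different internals (e.g. attention vs. a recurrent SSM)."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # Multiplying by T^2 keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2


# Hypothetical next-token logits over a 3-token vocabulary.
teacher = [2.0, 1.0, 0.1]
close_student = [1.8, 1.1, 0.2]
far_student = [0.1, 1.0, 2.0]

print(distillation_loss(teacher, teacher))  # 0.0
print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

Because the loss only looks at outputs, swapping the student's architecture changes nothing in this function, which is what makes cross-architecture distillation possible in the first place.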

Exhibitor Spot Talk: Towards A Blueprint for Open Science of Foundation Models by Zeynep Tufekci

I saw that title and was quite excited, as I'm a big fan of open science. Weirdly enough, the title they used on presentation day was Towards Foundation Models with World Intelligence instead. At first I thought this was a different presentation, but the same authors were listed. Bummer. Not much was said on the open science side.

The presentation was a part of the Exhibitor Spot Talks - Session 2, but I only dropped in for that one talk.

Are We Having the Wrong Nightmares About AI?

People are asking whether AI is going to lead to mass unemployment or extinction. Zeynep argues that neither is the right question, and that there is a huge role we can all play in getting ready for the AI crisis.

Historically, we get it wrong when trying to predict major technology transformations. For example, people mostly compared the steam engine to a horse. [3]

Scale matters, though, as a destabilizing force. We're going to have generative and creative output at massive scale. Generative AI is not a form of human intelligence, nor a small human on a trajectory to getting smarter. This mismatch actually makes things more difficult, because we are prone to making the human comparison.

Instead, she describes it as a "plausibility engine":

- In a way, models are always hallucinating, but sometimes they create realistic output.

- This is powerful in a verifiable system like programming because we can check its work.

She also drew a comparison to convergent evolution. LLMs do some things the same because that is functionally a good choice for that purpose.

Currently we use certain forms of proof as shortcuts for checking things. The result is that society has intentional "load-bearing friction", and making those things easy at scale breaks our old defenses.

Proof of:

- Effort - taking the time to write a custom cover letter for a job used to act as a gatekeeper; once that effort disappears, the gatekeeping breaks.

- Authenticity - video no longer works as evidence.

- Accuracy - something well written no longer demonstrates expertise.

- Sincerity - genuine sentiment is replaced with sycophancy.

- Humanity - the point of art is its humanity, our shared experience.

If you remove those five proofs, it creates a demand for mass surveillance. That will "solve" the problem, but not in a way compatible with human decency and liberty. There is also a big misalignment in the private sector: the business model. Right now companies depend on engagement-driven models paid for by ads. The AI-driven version of this will be disastrous.

We, as people at a large AI conference, need to be the ones who create solutions to these problems in a way that preserves our dignity, instead of trying to trick people into scrolling more. You are being offered a lot of money, but you also have a legacy.

[1] GenAI disclosure: I used Claude to transcribe this table from a photo, but I made sure to review the contents.

[2] Mmmm, language theory.

[3] Also, Freakonomics did a great series on horses recently that covers their history in the economy.
