NeurIPS 2025 - Thursday Notes

Invited Talk: The Art of (Artificial) Reasoning by Yejin Choi

I caught the back half of this presentation, so I missed the technical details, but the concluding remarks were interesting. She wanted to impress a couple of main ideas on the audience:

The importance of democratizing Generative AI (by transcending scaling laws):

- Ownership - AI belongs to and originates from humans and should reflect their values.

- Creation - AI is developed and governed by people worldwide, rather than a small group who can afford it.

- Beneficiary - AI should serve all humans and not just a small subgroup.

We need collaboration that is:

- Implicitly, open-science and open-source community.

- Explicitly, cross-institutional, cross-border collaborations.

They are trying to do this with the OpenThoughts Team.

Oral 3C Position Paper Orals

This was the first year NeurIPS had a position paper track. I enjoy attending sessions like this, the ones that take a stronger moral stance. The oral session "Computational Social Science and Cultural Analytics 1" at EMNLP 2024 was similarly enjoyable. There doesn't seem to be a way to link to presentations from last year, but the pdf handbook still lists the talks:

- "We Demand Justice!": Towards Social Context Grounding of Political Texts

- Fine-Grained Detection of Solidarity for Women and Migrants in 155 Years of German Parliamentary Debates

- On the Relationship between Truth and Political Bias in Language Models

- HEART-felt Narratives: Tracing Empathy and Narrative Style in Personal Stories with LLMs

- Outcome-Constrained Large Language Models for Countering Hate Speech

Anyways, I really appreciated this session:

Position: If Innovation in AI systematically Violates Fundamental Rights, Is It Innovation at All?

Talked about the Collingridge dilemma - a control paradox in regulating new technologies:

- Regulate too early: change is easy, but we don't know enough to intervene correctly.

- Regulate too late: the risks are clearer, but change is very costly.

A prime example is social media. We could have changed it early, but couldn't imagine the impact yet. Now, due to corporate entrenchment, social media is difficult to change.

"AI is not God-given, we are designing it. We can do better." - Professor Carissa Veliz

Instead, we need something better. The old paradigm for managing change is based in management and economics. The new paradigm is responsible innovation which is:

- Guided by democratic values.

- Accountable to society.

- Responsive to social needs.

We are dealing with systemic failures like bias & discrimination. The speaker gave the EU AI Act as a potential solution:

- Risk-based approach

- Future-proof

- Innovation catalyst

Personally, I'm torn on this. The EU has had some great policies like the GDPR, but it seems they are as prone to capture as we are in the US. The continued push for Chat Control is a great example of this. See a recent write-up by the EFF.

One parting thought that was really impactful is that "rights violations != progress".

No remedies, no rights.

More effort is needed to protect pedestrian privacy in the era of AI

The speaker couldn't go to the presentation due to travel difficulties, but they did provide a recording of their talk.

"we all have human bodies and need to move through the world"

Current methods for protecting pedestrian privacy are insufficient:

- People are recorded by sensors like cameras without their consent.

- Recordings make their way into public datasets for training models, also without their consent.

- It's possible for malicious users to recover pedestrian information from these datasets.

- People may feel uncomfortable in public spaces, leading to a loss of trust.

There is some regulatory support for consent in the GDPR, and California has the Consumer Privacy Act (CCPA). However, since it's impossible to get consent from every person in a public space, companies will not try, and instead take the risk of having to pay fees in court.

If we could automatically anonymize pedestrians, this would help, but current methods are insufficient:

- Face anonymization (like in Google Maps) alone is inadequate because it can be reversed. Also, it is insufficient at protecting against other techniques like gait analysis.

- Generating fake faces and bodies may cause copyright issues, and there is no systematic analysis of how useful this actually is for privacy protection.

To better protect privacy a method should:

- Not reduce the utility of the dataset.

- Ensure temporal consistency.

- Protect the privacy for multiple pedestrians, not just the nearest one.

- Be resistant to mode attacks.

We need something like full body and gait anonymization. Additionally, we need a benchmark for confirming how well these new techniques will work.

See Benn Jordan's videos on street cameras for some wonderful commentary on this topic.

Real-Time Hyper-Personalized Generative AI Should Be Regulated to Prevent the Rise of "Digital Heroin"

Real-time generative AI content is something we need to regulate right away. Short-form social media already shows addictive dynamics, and personalized AI content is rapidly improving.

Traditionally, creators upload content, and the system optimizes which content to show each user. That alone has already been shown to be addictive. Now, generation latency is going down, making real-time generation feasible at scale. Companies like Google and OpenAI are already releasing tools for watching AI-generated content.

Personalization and fine-tuning are getting better. This all means platforms can generate content in real time just for you. They can show you a video, see your reaction, and adjust as needed.

Addiction is an optimizer's shortcut to maximizing engagement.

So the speaker proposed a Designated Addictive System (DAS) label for services whose primary function is to maximize user engagement. A DAS should be required to submit a pre-market safety case showing mitigation, with regular, transparent, and public audits after release. Some example mitigations are:

- Friction-by-design: autoplay and infinite scroll off, slow mode, enforced breaks.

- Age protections: limited access for teens and children.

- Liability and enforcement: dark-pattern bans, data subpoenas, revenue-linked penalties.

Finally, the DAS label can sit on top of existing acts like the UK Online Safety Act.

Ultimately, we should compare GenAI content regulations to how we handle seat belts, cigarettes and alcohol, the UK sugar tax, etc. Also, the onus should be on the platforms and not the users and families.

Also, as is tradition, the talk calls for an addiction risk benchmark.

Panel: Emergent Stories: AI Agents and New Narratives

Somewhat in opposition to the moral of the previous session, this panel was about using AI to generate content in media. However, in this case the speakers were exploring mixed media.

Back in 2017 they only had a small room for their workshop. Now they have their own track and a packed double room.

There were five speakers (all poster presenters):

- Parag Mital (Emergentic)

- Vanessa Rossa

- Gottfried Haider and Jie Zhang (LLMscape)

- Manuel Flurin Hendry

Each had a chance to show their work, then sat down for audience questions. I enjoyed just sitting and listening to the panel, so I didn't take many notes.

Posters

The center has space to hold 900 posters. There were two poster sessions a day, for three days. That's a lot of posters! Here are two that I thought were interesting:

Collective Bargaining in the Information Economy Can Address AI-Driven Power Concentration:

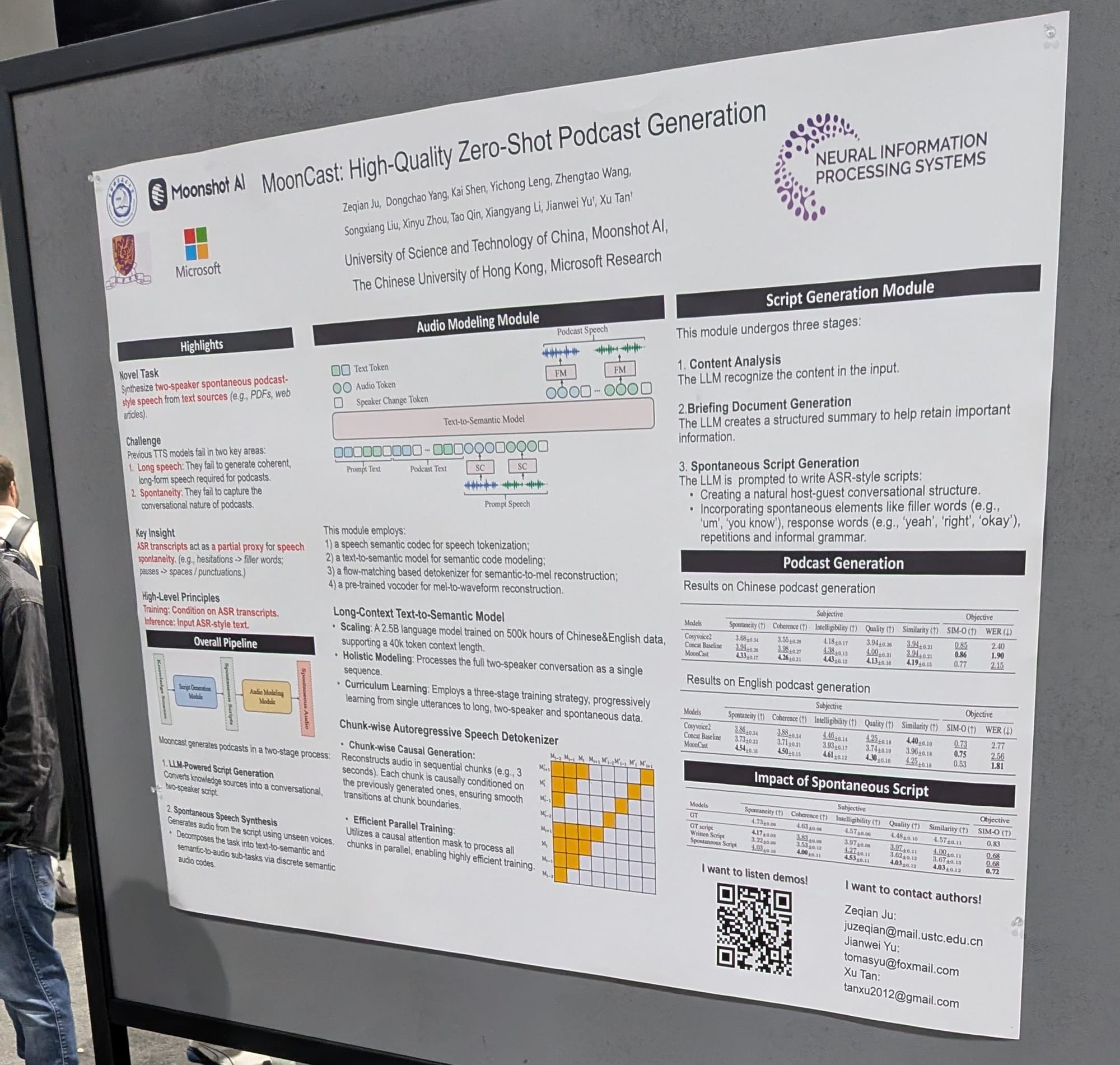

MoonCast: High-Quality Zero-Shot Podcast Generation:

This one caught my eye because I have strong opinions about the podcast ecosystem.

As I mentioned in my post about what podcasts I am listening to, I like the Economist Audio Edition because it's people reading the Weekly Edition word for word. Sadly, in November they announced they would start using AI-generated voices:

However, audio versions of a minority of our articles, including those published in response to breaking news, are now created using a third-party text-to-speech AI service. This AI audio has not been reviewed prior to publication. We make no representations or warranties in relation to this content, its accuracy or completeness, and we disclaim all liability regarding its receipt, content and use.

I feel mixed about this. On the plus side they say:

This is designed to fill the gap before the human-narrated version from the weekly edition is available... Please note that this AI narration is only temporary and does not replace human narration. The AI-narrated audio will be automatically updated with the full human recording around 9 pm GMT on Thursdays.

This will work well if you use their app, but I like the open RSS-based podcast ecosystem and it's unclear how the generation will work in that case.

Invited Talk: On the Science of “Alien Intelligences”: Evaluating Cognitive Capabilities in Babies, Animals, and AI by Melanie Mitchell

The title is a nod to this quote:

"Something is beginning to happen that was not expected even a few years ago. A threshold was reached, as if a space alien suddenly appeared that could communicate with us in an eerily human way.…Some aspects of their behavior appear to be intelligent, but if it's not human intelligence, what is the nature of their intelligence?" - Terrence Sejnowski

[1]

She argues that benchmark performance often does not predict real-world capabilities. So she has tried to combine several similar discussions from others into six principles:

- Be aware of your bias towards anthropomorphism. See the Eliza Effect.

- Be skeptical of your hypotheses. Are there other strategies that might have caused the desired behavior?

- Analyze failure types because they give more insight than the successes.

- Design novel variations to test robustness and generalization.

- Consider performance vs competence: does the system actually possess the capacity behind a task, or has it just figured out how to perform it?

- Replicate and build on others' results.

Killjoy explanations and negative results get published less often, but are still important.

In some cases, we want AI systems to think differently than us, e.g. AlphaFold's protein search. In other cases, we need AI to understand the world like us. Determining the nature of "alien intelligence" takes substantial rigor and creativity. We need that in AI evaluations.

[1] As well as "Baby steps in evaluating the capacities of large language models" by Michael C. Frank.
