NeurIPS 2025 - Friday Notes

Oral 5D Application 3

WebGen-Bench: Evaluating LLMs on Generating Interactive and Functional Websites from Scratch

I only caught the very tail end of the Q&A for this one.

QoQ-Med: Building Multimodal Clinical Foundation Models with Domain-Aware GRPO Training

This work is especially important in hospital emergency departments and in underserved countries, where doctors do not have much information or time to make a diagnosis. The hope is that machine learning can produce a quick initial diagnosis that helps doctors reach the final one.

However, traditional classifier models are limited, and LLM-based techniques struggle with multimodal inputs. Their outputs are also hard to interpret in a way that is useful to medical practitioners.

So the lab's goal was to develop a multimodal LLM that can reason across many domains. The bulk of the work went into the training techniques.

Reasoning can help with verifying a model's logic, and they especially wanted reasoning on time series data. So the reward setup for the reinforcement learning was:

- A binary reward for accuracy.

- Semantic match to a ground truth.

- A length reward for responses of at least 100 tokens, aiming for a middle ground between too short and too long.
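As a rough illustration of how three signals like these could be combined, here is a minimal Python sketch. It is not the authors' implementation: the function names, the equal weights, the regex word tokenizer, and the use of token-overlap F1 as a stand-in for "semantic match" are all assumptions made for the example.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase word tokenizer; a crude stand-in for a real LLM tokenizer."""
    return re.findall(r"\w+", text.lower())


def accuracy_reward(predicted_label: str, true_label: str) -> float:
    """Binary reward: 1.0 if the predicted diagnosis matches, else 0.0."""
    same = predicted_label.strip().lower() == true_label.strip().lower()
    return 1.0 if same else 0.0


def semantic_reward(response: str, reference: str) -> float:
    """Token-overlap F1, used here as a cheap proxy for semantic match (assumption)."""
    resp, ref = tokenize(response), tokenize(reference)
    if not resp or not ref:
        return 0.0
    common = sum(min(resp.count(t), ref.count(t)) for t in set(resp))
    if common == 0:
        return 0.0
    precision, recall = common / len(resp), common / len(ref)
    return 2 * precision * recall / (precision + recall)


def length_reward(response: str, min_tokens: int = 100) -> float:
    """1.0 once the response reaches the minimum length, else 0.0."""
    return 1.0 if len(tokenize(response)) >= min_tokens else 0.0


def total_reward(response: str, predicted_label: str, true_label: str,
                 reference: str, weights=(1.0, 1.0, 1.0)) -> float:
    """Weighted sum of the three signals; the weights are hypothetical."""
    w_acc, w_sem, w_len = weights
    return (w_acc * accuracy_reward(predicted_label, true_label)
            + w_sem * semantic_reward(response, reference)
            + w_len * length_reward(response))
```

A real setup would likely use the model's own tokenizer for the length check and an embedding-based or model-based score for the semantic term.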

The ICML 2025 CLIMB dataset was used, which has 4.57 million annotated samples at 19 TB, making it one of the largest public datasets for clinical diagnosis. They released a public repo with a script that handles all the dataset downloading and processing.

Their inputs are highly heterogeneous. GRPO treats all samples equally, which means the model focuses too much on the easy and abundant samples. E.g., a chest X-ray is handled easily, but an ultrasound is not. So they created domain-aware GRPO (DRPO):

- Upscales scarce and hard questions and downscales easy, abundant ones, using hierarchical clustering by domain and the elbow method.

- Performed better than both open-source and closed models.

- Adds less than one percent to the total training time.
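To show the general idea of rescaling GRPO's per-sample signal by domain, here is a minimal sketch. The group normalization in `grpo_advantages` is the standard GRPO step; everything else is hypothetical. The scaling formula (inverse square root of domain size times mean reward) is invented for illustration, and the clustering and elbow-method steps from the talk are left out entirely, so this should not be read as the DRPO algorithm itself.

```python
from collections import defaultdict
from statistics import mean, pstdev


def grpo_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Standard GRPO step: normalize rewards within one prompt's group of samples."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def domain_scales(groups: list[dict], eps: float = 1e-6) -> dict[str, float]:
    """Hypothetical per-domain scale: larger for rare, low-reward (hard) domains."""
    by_domain = defaultdict(list)
    for g in groups:
        by_domain[g["domain"]].append(mean(g["rewards"]))
    # Illustrative formula only: shrink with domain size and with mean reward.
    raw = {d: 1.0 / ((len(m) * (mean(m) + eps)) ** 0.5)
           for d, m in by_domain.items()}
    # Normalize so the scales average to 1 across domains.
    norm = mean(list(raw.values()))
    return {d: s / norm for d, s in raw.items()}


def domain_aware_advantages(groups: list[dict]) -> list[list[float]]:
    """Scale each group's GRPO advantages by its domain's factor."""
    scales = domain_scales(groups)
    return [[scales[g["domain"]] * a for a in grpo_advantages(g["rewards"])]
            for g in groups]


# Toy batch: chest X-rays are abundant and easy, ultrasound is scarce and hard.
batch = [
    {"domain": "chest_xray", "rewards": [1.0, 1.0, 0.0, 1.0]},
    {"domain": "chest_xray", "rewards": [1.0, 0.0, 1.0, 1.0]},
    {"domain": "chest_xray", "rewards": [1.0, 1.0, 1.0, 0.0]},
    {"domain": "ultrasound", "rewards": [0.0, 0.0, 1.0, 0.0]},
]
scales = domain_scales(batch)
advantages = domain_aware_advantages(batch)
```

In this toy batch the ultrasound group ends up with a larger scale than the chest X-ray groups, so its samples contribute more to the policy update.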

Additionally, they worked with medical professionals to annotate reasoning samples with the degree of relevance. Their work is available in the repo MIRL: Multisensory Intelligence Reinforcement Learning for LLMs.

NOVA: A Benchmark for Rare Anomaly Localization and Clinical Reasoning in Brain MRI

They want models to generalize to inputs that were not seen in training. LLMs can ground semantic knowledge about diseases, which works well for conditions they have seen before.

In healthcare, though, doctors frequently see new problems. E.g., 100M people in the US alone suffer from neurological disorders. Of those, up to 20M suffer from a "rare" disease, spread across more than 2,000 distinct classes that a model would not have seen in training.

What we want is open-world recognition. However, when you evaluate these medical models in that setting, they collapse, because medical benchmarks mimic a closed-world setting. This is not good for checking how models generalize. They can't handle a case that is different from what was expected. A brain scan anomaly could just be a result of age, or it could be an early sign of Alzheimer's.

NOVA is a benchmark for open-world generalization and reasoning in vision-language models. It provides real-world samples, rather than nicely cleaned up ones.

Eurorad is a system that lets doctors upload bizarre cases they find, so by nature it includes rare cases. They had doctors mark up the images with bounding boxes, taking multiple annotations to find agreement. Captions were added to provide details in a clinically meaningful way. Doctors are also allowed to ask for follow-up information and additional tests.
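The notes above do not say how agreement between annotators was measured. One common way to quantify overlap between bounding boxes is intersection over union (IoU), so here is a small sketch under that assumption. The `(x_min, y_min, x_max, y_max)` box format and the helper names are hypothetical.

```python
from itertools import combinations

# Assumed box format: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    # Width and height clamp to zero when the boxes do not overlap.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def mean_pairwise_iou(boxes: list[Box]) -> float:
    """Average IoU over every pair of annotator boxes for one finding."""
    pairs = list(combinations(boxes, 2))
    if not pairs:
        return 1.0  # a single annotation trivially agrees with itself
    return sum(iou(a, b) for a, b in pairs) / len(pairs)
```

A low mean pairwise IoU for a finding would flag it as one where annotators disagree and a consensus pass may be needed.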

Compared to previous benchmarks, Qwen's performance dropped by 65% on NOVA.

The dataset is available on Hugging Face.

More posters. So many posters.

I took way too many pictures of posters, but there are a few I would like to share.

The first is Merlin L48 Spectrogram Dataset. This one is from my alma mater, UMass Amherst. Also, I monitor birds at home using BirdNET-Go, which is built on the eBird dataset. I chatted with the presenter, and there were some fun problems they are dealing with around labeling.

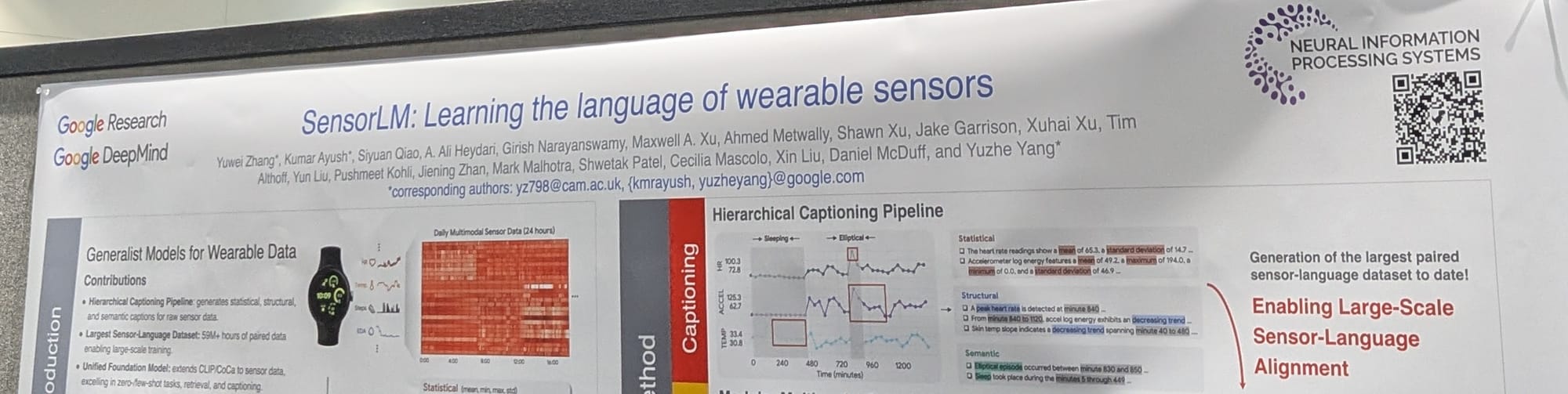

The next is SensorLM: Learning the Language of Wearable Sensors, out of Google. I really like wearable technology and have worked on personal projects around it in the past.

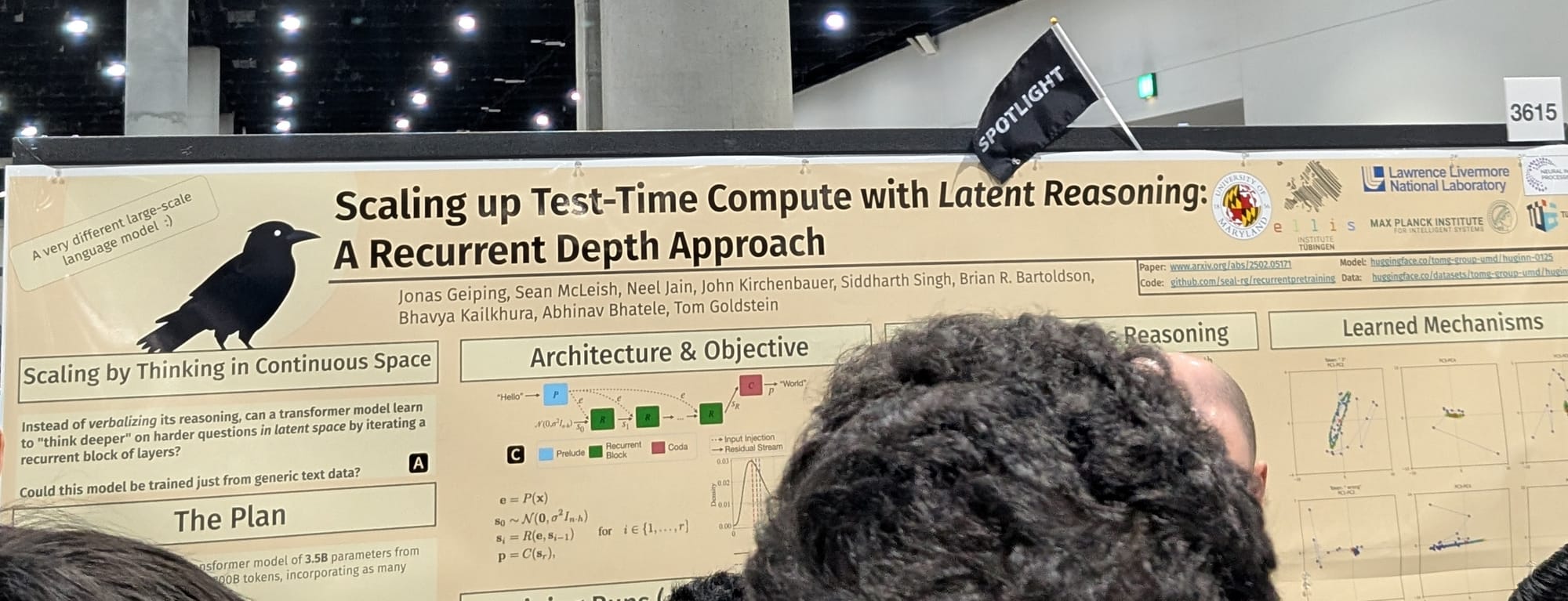

Yes, I was drawn to this one because of the corvid, but I also noticed the spotlight flag. I got to talk with one of the presenters about their work, Scaling up Test-Time Compute with Latent Reasoning: A Recurrent Depth Approach. They noticed my employer's name on my badge and said they had spoken with some of us at last year's NeurIPS. I definitely want to read the paper for this one.
